# Import the libraries we need for this lab

# Use the following line to install the torchvision library
# !mamba install -y torchvision

import torch
import torch.nn as nn
import torchvision.transforms as transforms
import torchvision.datasets as dsets
import torch.nn.functional as F
import matplotlib.pylab as plt
import numpy as np

One Hidden Layer


Neural Networks with One Hidden Layer

Objective

- Learn how to classify handwritten digits using a neural network.

Table of Contents

In this lab, you will use a neural network with a single hidden layer to classify handwritten digits from the MNIST database.

- Neural Network Module and Training Function

- Make Some Data

- Define the Neural Network, Optimizer, and Train the Model

- Analyze Results

Estimated Time Needed: 25 min

Preparation

We’ll need the following libraries:

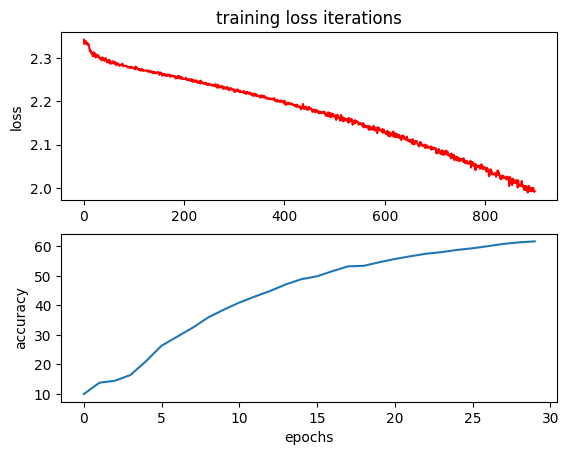

Use the following helper function to plot the training loss and the validation accuracy:

# Define a function to plot accuracy and loss
def plot_accuracy_loss(training_results):
    plt.subplot(2, 1, 1)
    plt.plot(training_results['training_loss'], 'r')
    plt.ylabel('loss')
    plt.title('training loss iterations')
    plt.subplot(2, 1, 2)
    plt.plot(training_results['validation_accuracy'])
    plt.ylabel('accuracy')
    plt.xlabel('epochs')
    plt.show()

Use the following function for printing the model parameters:

# Define a function to print model parameters
def print_model_parameters(model):
    count = 0
    for ele in model.state_dict():
        count += 1
        # Each layer contributes two entries (weight, then bias), so announce the layer on odd counts
        if count % 2 != 0:
            print("The following are the parameters for the layer ", count // 2 + 1)
        if ele.find("bias") != -1:
            print("The size of bias: ", model.state_dict()[ele].size())
        else:
            print("The size of weights: ", model.state_dict()[ele].size())

Use the following function to display a data sample:

# Define a function to display data
def show_data(data_sample):
    plt.imshow(data_sample.numpy().reshape(28, 28), cmap='gray')
    plt.show()

Neural Network Module and Training Function

Define the neural network module or class:

# Define a Neural Network class
class Net(nn.Module):

    # Constructor
    def __init__(self, D_in, H, D_out):
        super(Net, self).__init__()
        self.linear1 = nn.Linear(D_in, H)
        self.linear2 = nn.Linear(H, D_out)

    # Prediction
    def forward(self, x):
        x = torch.sigmoid(self.linear1(x))
        x = self.linear2(x)
        return x

Define a function to train the model. In this case, the function returns a Python dictionary to store the training loss and accuracy on the validation data.

# Define a training function to train the model
def train(model, criterion, train_loader, validation_loader, optimizer, epochs=100):
    useful_stuff = {'training_loss': [], 'validation_accuracy': []}
    for epoch in range(epochs):
        for x, y in train_loader:
            optimizer.zero_grad()
            z = model(x.view(-1, 28 * 28))
            loss = criterion(z, y)
            loss.backward()
            optimizer.step()
            # Record the loss for every iteration
            useful_stuff['training_loss'].append(loss.item())
        # Validation: count correct predictions without tracking gradients
        correct = 0
        with torch.no_grad():
            for x, y in validation_loader:
                z = model(x.view(-1, 28 * 28))
                _, label = torch.max(z, 1)
                correct += (label == y).sum().item()
        # Use the loader's own dataset rather than relying on a global variable
        accuracy = 100 * (correct / len(validation_loader.dataset))
        useful_stuff['validation_accuracy'].append(accuracy)
    return useful_stuff

Make Some Data

Load the training dataset by setting the parameter train to True, and convert each image to a tensor by passing a transform object as the argument transform:

# Create training dataset
train_dataset = dsets.MNIST(root='./data', train=True, download=True, transform=transforms.ToTensor())

Load the testing dataset by setting the parameter train to False, and convert each image to a tensor by passing a transform object as the argument transform:

# Create validation dataset
validation_dataset = dsets.MNIST(root='./data', train=False, download=False, transform=transforms.ToTensor())

Create the criterion function:

# Create criterion function
criterion = nn.CrossEntropyLoss()

Create the training-data loader and the validation-data loader objects:

# Create data loaders for both the training dataset and the validation dataset
train_loader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=2000, shuffle=True)
validation_loader = torch.utils.data.DataLoader(dataset=validation_dataset, batch_size=5000, shuffle=False)

Define the Neural Network, Optimizer, and Train the Model

Create the model with 100 neurons in the hidden layer:

# Create the model with 100 hidden neurons
input_dim = 28 * 28
hidden_dim = 100
output_dim = 10
model = Net(input_dim, hidden_dim, output_dim)

Print the model parameters:

# Print the parameters for model
print_model_parameters(model)

The following are the parameters for the layer 1
The size of weights: torch.Size([100, 784])
The size of bias: torch.Size([100])
The following are the parameters for the layer 2
The size of weights: torch.Size([10, 100])
The size of bias: torch.Size([10])

Define the optimizer object with a learning rate of 0.01:

# Set the learning rate and the optimizer
learning_rate = 0.01
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

Train the model for 30 epochs (this process takes time):

# Train the model
training_results = train(model, criterion, train_loader, validation_loader, optimizer, epochs=30)

Analyze Results

Plot the training loss for every iteration and the validation accuracy for every epoch:

# Plot the accuracy and loss

plot_accuracy_loss(training_results)

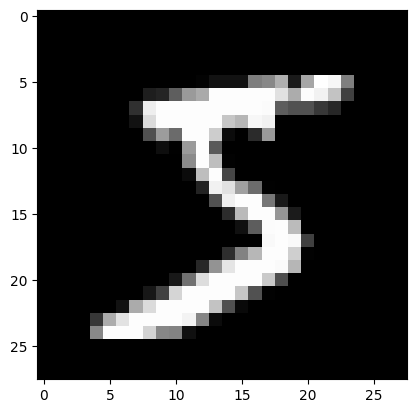

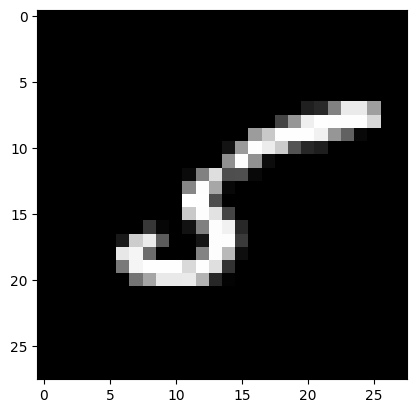

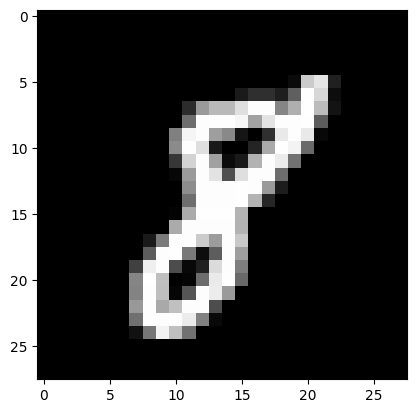
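The two curves use different x-axes: the loss is recorded once per mini-batch, while the accuracy is recorded once per epoch. The following sketch shows one way to average the per-iteration losses into per-epoch values so the two can be compared directly. The helper name average_loss_per_epoch and the toy loss values are hypothetical and are not part of the lab.

```python
import math

def average_loss_per_epoch(iteration_losses, iterations_per_epoch):
    """Group per-iteration losses into epochs and return the mean of each group."""
    averages = []
    for start in range(0, len(iteration_losses), iterations_per_epoch):
        chunk = iteration_losses[start:start + iterations_per_epoch]
        averages.append(sum(chunk) / len(chunk))
    return averages

# With 60,000 training images and a batch size of 2,000, each epoch has 30 iterations.
iterations_per_epoch = math.ceil(60000 / 2000)

# Made-up loss values for illustration only: 2 epochs of 2 iterations each.
toy_losses = [4.0, 2.0, 3.0, 1.0]
toy_averages = average_loss_per_epoch(toy_losses, 2)
print(iterations_per_epoch, toy_averages)  # prints: 30 [3.0, 2.0]
```

In the lab itself, the same helper could be called as average_loss_per_epoch(training_results['training_loss'], len(train_loader)), since len(train_loader) is the number of mini-batches per epoch.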

Plot the first five misclassified samples:

# Plot the first five misclassified samples
count = 0
for x, y in validation_dataset:
    z = model(x.reshape(-1, 28 * 28))
    _, yhat = torch.max(z, 1)
    if yhat != y:
        show_data(x)
        count += 1
    if count >= 5:
        break
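The loop above passes one sample at a time through the model. As an alternative, the sketch below collects the dataset indices of misclassified samples batch by batch. It assumes the data loader does not shuffle, so that positions within batches map back to dataset indices. The function find_misclassified, the AlwaysZero model, and the toy data are hypothetical stand-ins so that the example runs without the trained model or MNIST.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def find_misclassified(model, loader, limit=5):
    """Return up to `limit` dataset indices whose predicted label differs from the target."""
    wrong = []
    offset = 0  # dataset index of the first sample in the current batch
    model.eval()
    with torch.no_grad():
        for x, y in loader:
            yhat = model(x.view(x.size(0), -1)).argmax(dim=1)
            mismatches = (yhat != y).nonzero(as_tuple=True)[0]
            wrong.extend((mismatches + offset).tolist())
            offset += y.size(0)
            if len(wrong) >= limit:
                break
    return wrong[:limit]

class AlwaysZero(nn.Module):
    """Hypothetical stand-in model that predicts class 0 for every input."""
    def forward(self, x):
        logits = torch.zeros(x.size(0), 10)
        logits[:, 0] = 1.0
        return logits

# Toy data: six random "images" with known labels; only the label-0 samples are "correct".
images = torch.rand(6, 1, 28, 28)
labels = torch.tensor([0, 1, 0, 2, 0, 3])
toy_loader = DataLoader(TensorDataset(images, labels), batch_size=4, shuffle=False)

wrong = find_misclassified(AlwaysZero(), toy_loader)
print(wrong)  # prints: [1, 3, 5]
```

With the lab's objects, a call such as find_misclassified(model, validation_loader) would return indices that can be passed to show_data(validation_dataset[i][0]).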

Practice

Use nn.Sequential to build exactly the same model as you just built. Use the function plot_accuracy_loss to see the metrics. Also, try different epoch numbers.

# Practice: Use nn.Sequential to build the same model. Use plot_accuracy_loss to plot the accuracy and loss

# Type your code here
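One possible way to approach the practice is sketched below. It is not the lab's official solution. It builds a network with the same layer sizes as Net (784 inputs, 100 hidden neurons with a sigmoid activation, 10 outputs) and checks its output shape on random input. The name model_seq and the random batch are assumptions made for illustration.

```python
import torch
import torch.nn as nn

input_dim, hidden_dim, output_dim = 28 * 28, 100, 10

# Same architecture as Net: linear layer, sigmoid activation, linear layer.
model_seq = nn.Sequential(
    nn.Linear(input_dim, hidden_dim),
    nn.Sigmoid(),
    nn.Linear(hidden_dim, output_dim),
)

# Sanity check on a random batch of four image-sized tensors (not real MNIST data).
x = torch.rand(4, 1, 28, 28)
logits = model_seq(x.view(-1, 28 * 28))
n_params = sum(p.numel() for p in model_seq.parameters())
print(logits.shape, n_params)
```

To train it, create a new optimizer over model_seq.parameters() and pass model_seq to train in place of model, then call plot_accuracy_loss on the returned dictionary.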